What Can We Learn From Twitter's Open Source Algorithm?

What we can (and can't) learn from Twitter's partial open-sourcing of its recommendation algorithm.

Credit: Colin Fraser

Authors

Area of Study

Tags

This article was originally published on Sol Messing's website.

Last Friday, Twitter released what it calls “the algorithm,” which appears to be a highly redacted, incomplete part of code that governs the “For You” home timeline ranking system. And I saw nothing to suggest the parts of the code they put in the GitHub repository weren't authentic.

It’s highly unusual for a tech company to open up a product at the core of its monetization strategy. The thinking is that the more engaging the content you show people right when they log in, the more likely they are to stick around. And the more you keep people logged in, the more they see ads. And the more data you can get to show them better ads!

Transparency, or a distraction from closing the API?

Is this a step forward for transparency as Musk and Twitter would claim? I am skeptical. You can’t learn much from this release in and of itself — you need the underlying model features, parameters, and data to really understand the algorithm. Those combine into a system that’s effectively different for everyone! So even if you had all that, you’d likely need to algorithmically audit the system to really get a handle on it.

And Twitter made it prohibitively expensive for external researchers to get that data through its API with the recent price updates ($500k/yr). So at the same time Twitter is releasing this code, it’s made it incredibly difficult for research to audit this code

What’s in the code? Gossip and Rumors

Ukraine: There were some initial reports that Twitter was downranking tweets about Ukraine. I looked at the code and can tell you those claims are wrong — Twitter has an audio-only Clubhouse clone called Spaces and that code is for that product, not ordinary tweets on the home timeline. What’s more, this is likely a label related only to crisis misinformation, as per Twitter’s Crisis Misinformation Policy.

Uh, this code looks like it *only* relates to Twitter's Spaces product. If so it is *not* used for down-ranking ordinary tweets in home timeline ranking.

— Sol Messing (@SolomonMg) April 2, 2023

It's called here with the other twitter Spaces spaces visibility models and labels: https://t.co/7n8Tl7WVlJ https://t.co/stSThUTvUe

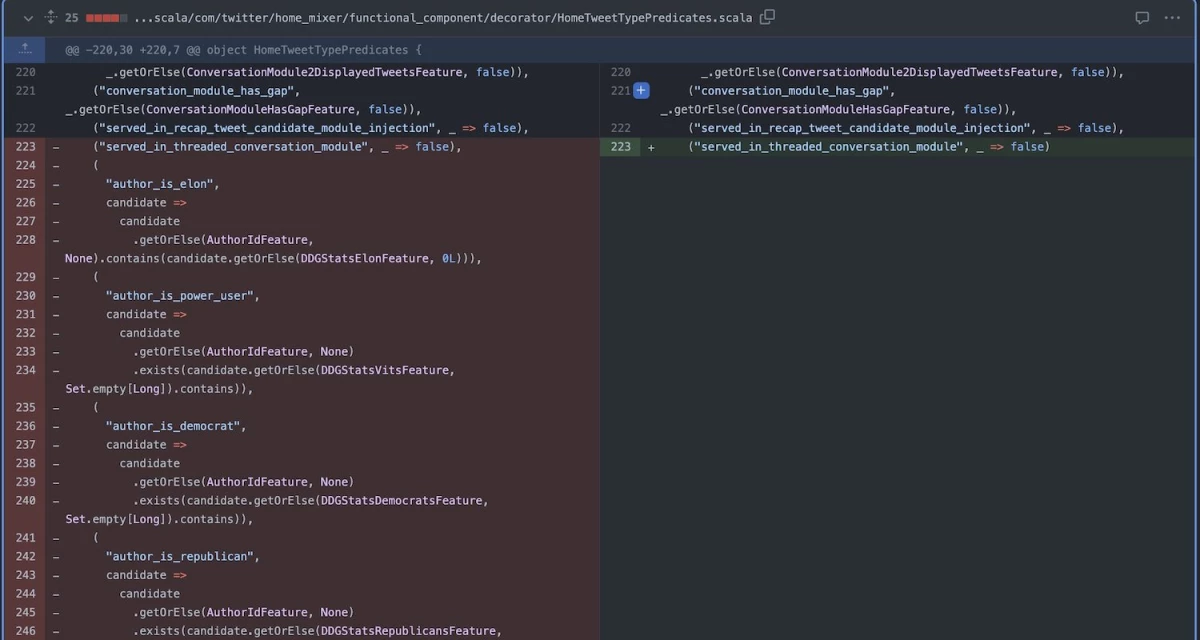

Musk Metrics: One of the most interesting things we learned from the code is that Twitter created an entire suite of metrics about Elon Musk’s personal Twitter experience. The code shows they fed those metrics to the experimentation platform (Duck Duck Goose, or DDG), which at least historically has been used to evaluate whether or not to ship products.

Twitter’s algorithm specifically labels whether the Tweet author is Elon Musk

— Jane Manchun Wong (@wongmjane) March 31, 2023

“author_is_elon”

besides the Democrat, Republican and “Power User” labelshttps://t.co/fhpBjdfifX pic.twitter.com/orCPvfMTb9

This episode is consistent with reporting that engineers are very concerned about how any features they ship affect the CEO's personal experience on Twitter. And other reporting has suggested that there may have been a Musk centric boost feature that shipped, and you would want exactly this kind of instrumentation to understand how that worked in practice.

Republican, Democrat Metrics: We also learned that Twitter is logging similar metrics for lists of prominent Democrat and Republican accounts, ostensibly to understand whether any features that they ship affect those sets of accounts equally. Now we know that conservative accounts tend to share more misinformation than liberal accounts on both Twitter and on Facebook. And, Musk has alleged that Democrats and Big Tech are colluding to enforce policy violation unequally across parties.

But if you have these "partisan equality" stats as part of your ship criteria, perhaps on equal footing with policy violation frequency, you can see how this could really affect the types of health and safety features that actually make it to the site in production.

This code was then comically removed via pull requests from Twitter. Because once you delete something on GitHub, it just goes away. Right?

"Remove stats collection code measuring how often Tweets from specific user groups are served"

— Colin Fraser (@colin_fraser) April 1, 2023

lmaohttps://t.co/rVtaR8Kphh

Twitter Blue Boost: What's more, we sort of knew that Twitter Blue users get a boost in feed ranking, but the code make it clear that it could double your score among people who don’t follow you, and quadruple it for those who do.

Not sure what I expected, but interesting that first pull request on newly open-sourced @Twitter algo (https://t.co/kAVP0zdzki) is to downweight verified user multipliers. pic.twitter.com/PfqIdVTDnk

— Caitlin Hudon (@beeonaposy) March 31, 2023

As Jonathan Stray pointed out, if this counts as a paid promotion, the FTC might require Twitter to label your tweets as ads. Now we kind of already knew this from Musk's Twitter Blue announcement, but having evidence in the code might cross a different line for the FTC.

So what about the actual algorithm? What does this say about feed ranking?

The code itself is there but it’s missing specifics — key parameters, feature sets, and model weights are absent or abstracted. And obviously the data.

The most critical thing we learned about Twitter’s ranking algorithm is probably from a readme file that former Facebook Data Scientist Jeff Allen found. If we take that at face value, a fav (Twitter like) is worth half a retweet. A reply is worth 27 retweets, and a reply with a response from a tweet's author is worth a whopping 75 retweets!

According to the Heavy Ranker readme, it looks like this is the "For you" feed ranking formula is

— Jeff Allen (@jeff4llen) March 31, 2023

Each "is_X" is a predicted probability the user will take that action on the Tweet.

Replies are the most important signal. Very similar to MSI for FB.https://t.co/Bmv7qg4voc pic.twitter.com/lWfaUboT6q

Now it’s not quite that simple — what about when a tweet is first posted and there’s no data? Twitter’s deep learning system (in the heavy ranker) will do some heavy lifting and predict the likelihood of each of these actions based on the tweet author, their network, any initial engagements, the tweet text, and thousands of signals and embeddings.

Of course, what happens in the first few minutes when a tweet is posted deeply shapes who sees and engages with it downstream in the future.

[And the way this is implemented in practice is that the model handles all cases, but as you get more and more real time data on a tweet, those real time features dominate everything else and push those probabilities close to 1, see discussion here.]

Now I should point out that there are some spammy accounts claiming to have found ranking parameters in the code. They’re wrong, those are used to retrieve tweets from your network for candidate generation only. Lucene is an open source search tool.

Uh, this is for search query results, not home timeline ranking.

— Sol Messing (@SolomonMg) April 2, 2023

See the thing that says "luceneScoreParams"??

Lucene is a search library: https://t.co/993crKVHJi https://t.co/AdGtjCbOlx

I should point out, however, that some of the "Earlybird" code was at one point used in timeline ranking, and it appears that it may be used in cr-mixer, which is used in candidate generation for out-of-network tweets.

Interestingly, Twitter appears to remove competitor URLs, perhaps only for tweets that are outside of network (you don’t follow the author).

What else goes into the "the Algorithm?"

What gets ranked in the first place? The other piece here is the "TikTok" part of the ranking algorithm, which is also incomplete without the models/data/parameters/etc. What I mean is the code that takes content from across the platform and says "I’m going to put this into your queue for the heavy ranker to sort out."

Now on Twitter often that historically meant tweets posted by or replied to by accounts you follow. But, Twitter realized it could find a lot more content for that heavy ranker magic.

There’s a complex system that inserts tweets into your queue for ranking. This is called candidate generation in the "recommendation system" subfield of applied computing.

If you follow a lot of people on Twitter like me, about half of the candidate tweets in Twitter’s ranked "for you" timeline at any given time are from people you follow.

Now, if you don’t follow a ton of people, or if you have a new account, you can run out of these tweets, and then Twitter will try to find additional candidates so that you have ranked content. If so, it means that this system is going to govern what goes in your home timeline feed like TikTok — gathering content it predicts you’ll like from across the platform.

This takes place in cr-mixer, and although some of the high level function calls are there, much of the code and the models appear to be missing, and many files come with this warning at the top: "This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository."

Twitter seems to have made some of the systems underlying candidate generation public, including its SimCluster model.

BTW, I’d like to give a shout out to Vicki Boykis, and Igor Brigadir who are doing amazing work to map out the codebase and unearth exactly what’s missing and what’s not.

Trust and Safety

A lot of the code related to Trust and Safety is missing, presumably to prevent bad actors from learning too much and gaming those systems. However, there do seem to be some specifics about the kinds of things Twitter considers borderline or violating that I don’t think were previously public. There are a bunch of safety parameters in the code, some of which are in Twitter’s policy documents, but some are not.

There are entries like "HighCryptospamScore" that appear in the code, which may give scammers hints about how to craft tweets to get around detection systems. The same is true for code that contains links to "UntrustedUrl" and "TweetContainsHatefulConductSlur" for low, medium, and high severity.

There’s also a reference to a "Do Not Amplify" parameter in the code, which was discussed in the Twitter files but seems not to be publicly documented in its policies. There are entries like "AgathaSpam," which refers to a propriety embedding used across the codebase. Twitter also has a bunch of visibility rules hardcoded in Scala that might be useful to bad actors trying to game the system, outlining what rules are in play for all tweets, new users, user mentions, liked tweets, realtime spam detection, etc. Finally, some of the consequences for those violations are spelled out in Scala as well.

Of course, it’s really hard to know with certainty that any of this wasn’t in public somehow before this release.