Political Psychology in the Digital (mis)Information age: A Model of News Belief and Sharing

To better understand why misinformation has become so pervasive, we examine the psychological factors that lead people to spread “fake news.” We then propose strategies that use those same factors to curb the spread of misinformation.

Citation

Van Bavel, Jay J., Elizabeth A. Harris, Philip Pärnamets, Steve Rathje, Kimberly C. Doell, and Joshua A. Tucker. “Political Psychology in the Digital (Mis)Information Age: A Model of News Belief and Sharing.” Social Issues and Policy Review 15, no. 1 (2021): 84–113. https://doi.org/10.1111/sipr.12077

Date Posted

Jan 22, 2021

Authors

- Jay J. Van Bavel

- Elizabeth A. Harris

- Philip Pärnamets

- Steve Rathje

- Kimberly C. Doell

- Joshua A. Tucker

Abstract

The spread of misinformation, including “fake news,” propaganda, and conspiracy theories, represents a serious threat to society, as it has the potential to alter beliefs, behavior, and policy. Research is beginning to disentangle how and why misinformation is spread and identify processes that contribute to this social problem. We propose an integrative model to understand the social, political, and cognitive psychology risk factors that underlie the spread of misinformation and highlight strategies that might be effective in mitigating this problem. However, the spread of misinformation is a rapidly growing and evolving problem; thus scholars need to identify and test novel solutions, and work with policymakers to evaluate and deploy these solutions. Hence, we provide a roadmap for future research to identify where scholars should invest their energy in order to have the greatest overall impact.

Background

Misinformation poses a serious threat to our democracy: it undermines trust in society's key institutions and makes it harder for citizens to make informed choices and hold their politicians accountable. The problem is compounded by the rapid growth of social media. More than 3.6 billion people now use social media worldwide, and for many of them it is their main source of news. Past studies have shown that online misinformation spreads farther and faster than the truth, and this is especially true of political misinformation.

Study

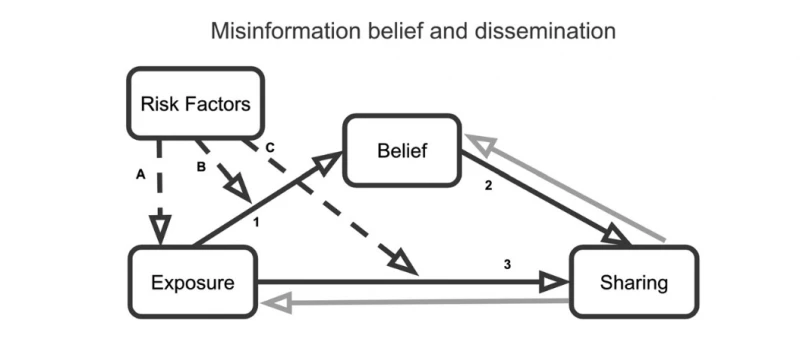

To better understand this phenomenon, we propose a novel theoretical model of the psychological processes that underlie exposure to, belief in, and sharing of misinformation, along with the risk factors that shape each. Our model links exposure to misinformation to both belief and sharing, but treats belief and sharing as separate processes: one can occur without the other. People may believe a false story without sharing it, or share it without believing it. Thus, if we want to understand who shares misinformation, we need to consider pathways to sharing that run through belief as well as pathways that do not. We then connect existing concepts from social psychology and political science (including partisan bias, polarization, political ideology, cognitive style, memory, morality, and emotion) to the links among these three factors: exposure, belief, and sharing. We argue that the model, together with these connections, can be used to design interventions that reduce both the spread of and belief in misinformation.

Results

Our four proposed solutions are fact-checking; equipping people with psychological resources to better spot fake news; eliminating bad actors; and fixing the incentive structures that promote fake news. Traditionally, fact-checking has been the most widely used intervention to halt the spread of misinformation. However, it is much less effective in political contexts, where people hold strong prior beliefs and tend to be skeptical of fact-checks from outgroup sources. Inoculation, or media literacy-based interventions, can also reduce individuals' susceptibility to misinformation by giving them the tools to spot fake news when they see it. Encouraging reflective thinking, for example by giving users a brief “accuracy nudge” before they post, has also shown promise. Finally, social media platforms can do a great deal by changing their internal incentive structures or algorithms to disincentivize the creation and sharing of false claims while encouraging the detection of misinformation. Enforcing regulations that remove bots and bad actors from platforms would also go a long way toward reducing misinformation. Taken together, these solutions point to the need for a multifaceted approach.