How Pro-Regime Bots are Used in Russia to Demobilize Opposition and Manipulate Public Perception

In a new paper, we investigate how pro-regime bots employ a variety of tactics to prevent, suppress, or react to offline and online opposition activities in Russia, finding online activities produce stronger reactions than offline protests.


As the Russia-Ukraine crisis continues to unfold, reports indicate that the Kremlin is ramping up its propaganda campaign, using social and other online media to spread disinformation. The survival of non-democratic regimes depends largely on their ability to manage the information environment in this way, and since its emergence, social media has become a key addition to autocrats’ toolkits.

There’s abundant research showing how non-democratic regimes like Russia use human troll accounts on social media to sow distrust and disseminate deceptive information. But research on how such regimes use bots — algorithmically controlled social media accounts — in the context of domestic politics is scarce.

In a new paper, published today online in the American Political Science Review, researchers from NYU’s Center for Social Media and Politics (CSMaP) investigate how pro-regime bots employ a variety of tactics to prevent, suppress, or react to offline and online opposition activities in Russia, finding online activities produce stronger reactions.

Why deploy bots in Russia?

“Automated bots are cheap, hard to trace, and can be deployed at a very large scale,” said CSMaP Co-Director Joshua A. Tucker, a co-author of the study. “This makes them an easy way for regimes to inflate support and manipulate the information environment, and for other political actors to promote certain policies and signal loyalty to the regime.”

Tension between incumbents and the opposition is a key component, if not the key component, of domestic politics in competitive authoritarian regimes. To contain the opposition, regimes often suppress unfavorable information and signal the high cost of engaging in opposition activities.

In our study, we analyze different tactics pro-regime bots could use to achieve these goals when responding to both offline and online protests. Specifically, we identify four potential strategies bots could employ:

- Increasing the volume of information online to drown out the protest-related agenda (volume).
- Amplifying a more diverse set of news to distract users (retweet diversity).
- Posting more pro-Vladimir Putin content to inflate support for the regime (cheerleading).
- Trolling and harassing opposition leaders to increase the expected cost of supporting rebel activities (negative campaigning).
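To make the four strategies more concrete, here is a minimal sketch of how one day of bot tweets could be summarized into one indicator per strategy. It is not the study's measurement procedure: the `Tweet` record, the keyword lists, and the use of "number of distinct accounts retweeted" for retweet diversity are all hypothetical choices for illustration. Counting mentions of an opposition leader is only a crude proxy for negative campaigning, since a real measure would also need to capture hostile tone.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical keyword stems; the study's actual measures may differ.
PUTIN_KEYWORDS = ("путин", "putin")
OPPOSITION_KEYWORDS = ("навальн", "navalny")

@dataclass
class Tweet:
    author: str
    text: str
    retweeted_user: Optional[str] = None  # None when the tweet is not a retweet

def daily_strategy_metrics(tweets: List[Tweet]) -> dict:
    """Compute four illustrative indicators for one day of bot tweets."""
    texts = [t.text.lower() for t in tweets]
    retweeted = {t.retweeted_user for t in tweets if t.retweeted_user is not None}
    return {
        # volume: how many tweets the bots posted that day
        "volume": len(tweets),
        # retweet diversity: how many distinct accounts were retweeted
        "retweet_diversity": len(retweeted),
        # cheerleading: tweets mentioning Putin (keyword match)
        "cheerleading": sum(any(k in s for k in PUTIN_KEYWORDS) for s in texts),
        # negative campaigning proxy: tweets mentioning an opposition leader
        "negative_campaigning": sum(any(k in s for k in OPPOSITION_KEYWORDS) for s in texts),
    }

# Toy example (invented tweets, not data from the study)
day = [
    Tweet("bot1", "Путин встретился с губернатором"),
    Tweet("bot2", "news item", retweeted_user="rt_russian"),
    Tweet("bot3", "sports item", retweeted_user="sport_acc"),
    Tweet("bot1", "Навальный опять врёт"),
]
print(daily_strategy_metrics(day))
# → {'volume': 4, 'retweet_diversity': 2, 'cheerleading': 1, 'negative_campaigning': 1}
```

Comparing these daily indicators on protest days against ordinary days is the kind of contrast the hypotheses below are about.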

From these strategies, we develop a set of hypotheses seeking to answer this question: Are bots primarily used as yet another tool to demobilize citizens when the opposition is trying to bring people onto the streets — or are they mainly employed as an online agenda control, or gatekeeping, mechanism tailored to regulating information flows on social media?

We empirically test these hypotheses using a large collection of data on the activity of Russian Twitter bots from 2015 to 2018.

What we found

Pro-government bots tweet more, and retweet a more diverse set of accounts, when there are large street protests or an increase in online opposition activity; online activities produce the stronger reactions, the study finds.

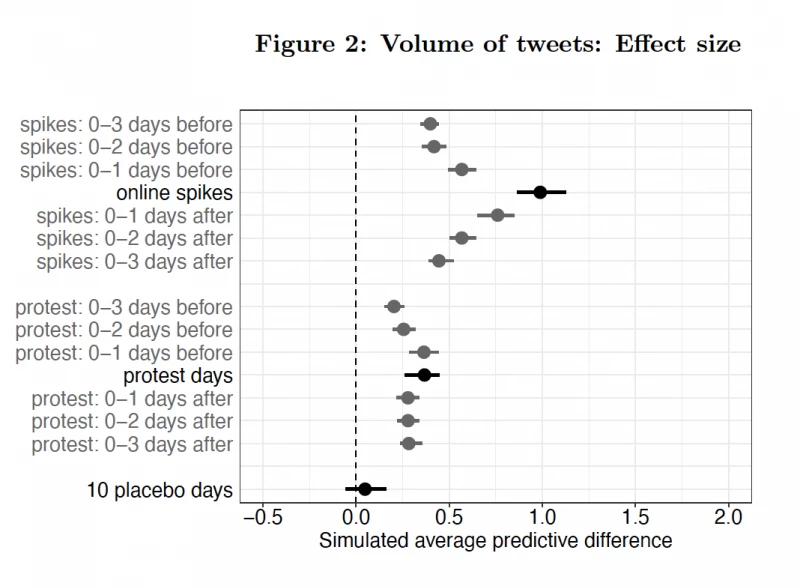

Figure 2 shows the increase in tweeting by pro-regime bots in response to offline protests and to online opposition activity. The effect of online activity is 2.7 times as strong as that of offline protests.

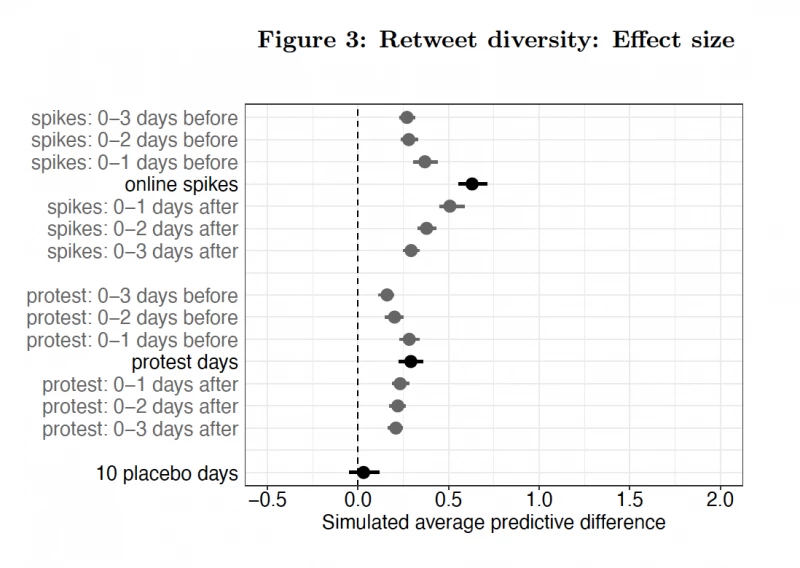

Figure 3 shows how pro-regime bots retweet a wider range of accounts in response to offline protests and to online opposition activity. The online effect is roughly twice as large as the offline one.

Results for the other two strategies — cheerleading and negative campaigning — are mixed. Pro-government bots do not increase their tweeting about Putin during mass political protests, and there’s a limited (though statistically significant) increase in bot activity during online protests. For negative campaigning, there is a slight increase in activity in response to offline protests, and no increase in response to online activity.

Per bot, these increases are relatively small. During online opposition mobilization, for example, each pro-regime bot posts on average one additional tweet and half an additional retweet. By acting en masse, however, bots can produce substantial shifts in the volume and sentiment of political tweets.
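As a back-of-the-envelope illustration of the en masse point, assume (hypothetically) that all 1,516 pro-regime bots detected in the study respond at the average per-bot rate during online opposition mobilization. Not every bot is active at the same time, so this shows the order of magnitude only; it is not a result reported in the paper.

$$
1{,}516 \times 1 = 1{,}516 \ \text{additional tweets}, \qquad 1{,}516 \times 0.5 = 758 \ \text{additional retweets}
$$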

Although these findings are limited to pro-government Twitter bots in Russia, and further research is needed to understand whether they apply to other platforms or countries, uncovering these strategies remains highly important.

“We know that Russia is heavily invested in using social media as part of its broader propaganda strategy. Understanding how bots are used in the context of Russian domestic politics is therefore an important piece in a larger puzzle,” said Tucker. “These results demonstrate how bots — an easy-to-use and cheap technology — are yet another tool that can be employed by non-democratic regimes in the modern digital information era.”

The paper’s other authors were Denis Stukal, a Faculty Research Affiliate at CSMaP and Associate Professor at Russia’s HSE University; Sergey Sanovich, a CSMaP Postdoctoral Research Affiliate and Postdoctoral Research Associate at the Center for Information Technology Policy at Princeton University; and Richard Bonneau, a professor in NYU’s Department of Biology and Courant Institute of Mathematical Sciences and co-director of CSMaP (currently on leave).

***

Methodology

To conduct the analysis, we relied on approximately 32 million Russian-language tweets about Russian politics, posted in 2015–2018 by about 1.4 million Twitter users. We used a machine-learning algorithm to detect 1,516 pro-regime bots, which together posted more than 1 million of the tweets in our collection.
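The paragraph above does not describe the detection algorithm itself. As a generic illustration of supervised bot detection, and explicitly not the authors' model, here is a minimal logistic-regression classifier in plain Python. The three account-level features and the toy training data are hypothetical. The study's pipeline also identifies which bots are pro-regime; this sketch covers only a bot-versus-human step.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def train_logistic(X, y, lr=0.5, epochs=2000):
    """Fit logistic-regression weights with batch gradient descent."""
    n, k = len(X), len(X[0])
    w, b = [0.0] * k, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * k, 0.0
        for xi, yi in zip(X, y):
            # prediction error for one labeled account
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(k):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def predict_bot(features, w, b, threshold=0.5):
    """Label an account as a bot if its predicted probability reaches the threshold."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, features)) + b) >= threshold

# Hypothetical features: [share of retweets, share of tweets with links, tweets per day / 100].
# Labels: 1 = bot, 0 = human. Toy values, not data from the study.
X_train = [[0.90, 0.80, 0.90], [0.85, 0.90, 0.70], [0.95, 0.70, 0.80],
           [0.20, 0.10, 0.05], [0.30, 0.20, 0.10], [0.10, 0.30, 0.02]]
y_train = [1, 1, 1, 0, 0, 0]

w, b = train_logistic(X_train, y_train)
print(predict_bot([0.90, 0.85, 0.80], w, b))  # → True
```

In practice a detector like this would be trained on a much larger set of manually labeled accounts and validated on held-out data before being applied to all 1.4 million users.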

To identify offline protests, we used the Integrated Crisis Early Warning System (ICEWS) database to extract information on all protests in Russia in 2015–2018. We manually validated the results by searching for mentions of protest events in Russian- and English-language mass media, and restricted our attention to protests with at least 1,000 participants.
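The selection rule described above can be sketched as a simple filter. The `ProtestEvent` record and its fields are hypothetical stand-ins, not the ICEWS schema. The sketch assumes that a participant estimate and a yes/no validation flag are available for each event after the manual media check.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional

@dataclass
class ProtestEvent:
    day: date
    country: str
    participants: Optional[int]   # None if no size estimate was found (assumption)
    confirmed_in_media: bool      # outcome of the manual media validation (assumption)

def select_protests(events: List[ProtestEvent], min_participants: int = 1000) -> List[ProtestEvent]:
    """Keep validated protests in Russia, 2015-2018, with at least min_participants."""
    return [
        e for e in events
        if e.country == "Russia"
        and 2015 <= e.day.year <= 2018
        and e.confirmed_in_media
        and e.participants is not None
        and e.participants >= min_participants
    ]

# Invented records for illustration only
events = [
    ProtestEvent(date(2016, 4, 10), "Russia", 2500, True),
    ProtestEvent(date(2016, 5, 3), "Russia", 300, True),
]
print(len(select_protests(events)))  # → 1
```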

To identify periods of increased online opposition activity, we counted the total number of tweets from 15 prominent opposition leaders or related organizations. We defined a spike as a day on which these accounts posted at least five times as many tweets as on the median day in the period from one month before to one month after that day.
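A minimal sketch of this spike rule, under stated assumptions: "a month" is taken as 30 days on each side, the day being tested is excluded from its own baseline, days near the edges of the data use whatever part of the window exists, and days with a zero baseline are skipped so that any nonzero count is not automatically flagged. These are illustrative choices, not details confirmed by the study. `daily_counts[d]` is the summed number of tweets from the 15 opposition accounts on day `d`.

```python
from statistics import median
from typing import List

def find_spike_days(daily_counts: List[int], window: int = 30, ratio: float = 5.0) -> List[int]:
    """Return indices of days whose count is at least `ratio` times the median
    daily count over the `window` days before and after (day itself excluded)."""
    spikes = []
    for d, count in enumerate(daily_counts):
        before = daily_counts[max(0, d - window):d]
        after = daily_counts[d + 1:d + 1 + window]
        neighbours = before + after
        if not neighbours:
            continue
        baseline = median(neighbours)
        # Skip zero baselines (assumption) to avoid flagging any nonzero day.
        if baseline > 0 and count >= ratio * baseline:
            spikes.append(d)
    return spikes

# Toy series: 10 tweets a day, with one day of 60 tweets in the middle
counts = [10] * 61
counts[30] = 60
print(find_spike_days(counts))  # → [30]
```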