Do Twitter Warning Labels Work?

Twitter put warning labels on hundreds of thousands of tweets. Without a hard block, tweets continue to spread — especially tweets by President Trump.

Credit: Trusted Reviews

This article was originally published at The Washington Post.

Recently, Twitter CEO Jack Dorsey and Facebook CEO Mark Zuckerberg testified before the Senate Judiciary Committee on misinformation and the 2020 election. Republicans and Democrats alike grilled the pair for more than four hours, especially on how they moderate what can be shared on their platforms and how they enforce policy violations.

Senators pressed Dorsey in particular about Twitter’s decision to label President Trump’s tweets as false or misleading. Republicans accused Twitter of bias against conservatives, while Democrats blamed it for allowing misinformation to spread online.

What did Twitter do, and did its efforts stop misinformation from spreading? We investigated and found that blocking tweets from spreading worked, while tweets with warning labels continued to spread, although less on average than tweets without warning labels. Trump’s tweets were the exception: those with warning labels spread more than the president’s tweets without warnings.

How we did our research

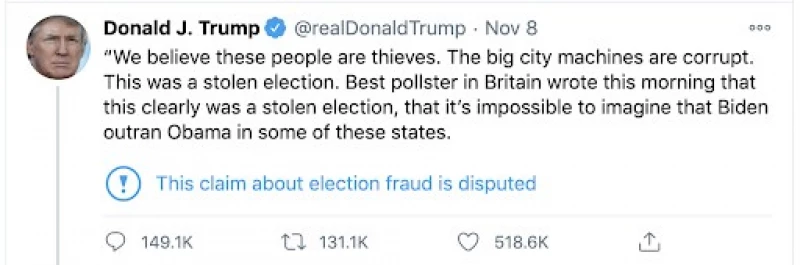

Just before the hearings, Twitter announced that it had labeled 300,000 election-related tweets as disputed and potentially misleading, but did not remove these tweets or block them from spreading. We call this a “soft intervention.” Twitter reported a harsher response to 456 tweets, which it labeled with a warning message while blocking other users from retweeting, replying to, or “liking” the tweet. We call this a “hard intervention.” You can see an example of each below.

To examine how these approaches worked, each day from Nov. 1 through Nov. 16 we collected all tweets originally posted by members of Congress; 2020 congressional candidates; high-profile members of the executive branch, such as the president, vice president and Cabinet members; and some national political organizations, such as the Democratic National Committee and Republican National Committee accounts. Altogether, we collected 36,218 tweets from the 3,482 accounts in our analysis.

Among these, 160 tweets received a soft intervention and 44 tweets received a hard intervention. Tweets from Trump received the greatest number of soft interventions with 68, and the second-greatest number of hard interventions, 16. Rep.-elect Marjorie Taylor Greene (R-Ga.) led in hard interventions with 23. Because of the large number of labeled tweets and high engagement with Trump’s account, we examine the impact of the labels separately for tweets by Trump.
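To make the scale of these counts concrete, here is a short arithmetic sketch in Python. The numbers come from the paragraph above; the variable names and the choice to round to two decimal places are ours. It shows that labeled tweets were a tiny fraction of the sample and that Trump alone accounted for more than 40 percent of the soft interventions.

```python
# Label counts reported above for the Nov. 1-16 sample.
TOTAL_TWEETS = 36_218
SOFT_TOTAL, HARD_TOTAL = 160, 44
TRUMP_SOFT, TRUMP_HARD = 68, 16
GREENE_HARD = 23


def pct(part: int, whole: int) -> float:
    """Return part / whole as a percentage, rounded to two decimal places."""
    return round(100 * part / whole, 2)


shares = {
    "soft labels, share of all tweets": pct(SOFT_TOTAL, TOTAL_TWEETS),
    "hard labels, share of all tweets": pct(HARD_TOTAL, TOTAL_TWEETS),
    "Trump, share of soft labels": pct(TRUMP_SOFT, SOFT_TOTAL),
    "Trump, share of hard labels": pct(TRUMP_HARD, HARD_TOTAL),
    "Greene, share of hard labels": pct(GREENE_HARD, HARD_TOTAL),
}

# Trump and Greene together account for 39 of the 44 hard interventions.
trump_and_greene_hard = TRUMP_HARD + GREENE_HARD

for name, value in shares.items():
    print(f"{name}: {value}%")
```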

For each of the 36,218 tweets in our sample, we then tracked the cumulative number of retweets over time.

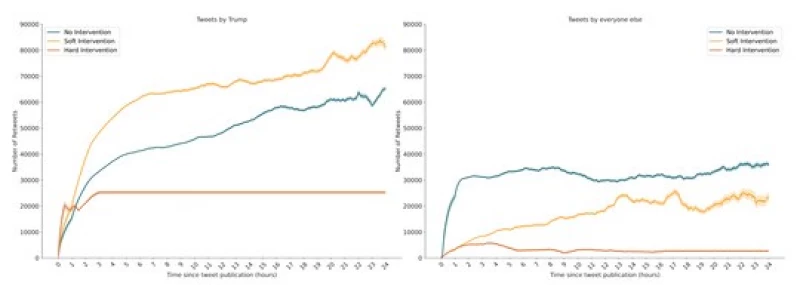

In the figures above, you see the average cumulative number of retweets per original tweet in our sample, grouped by whether Twitter left the tweet alone, added a warning label (soft intervention) or blocked its spread (hard intervention). The figure on the right shows the reach of Trump’s tweets by category; the figure on the left shows the rest of our sample, excluding Trump. Because this is a cumulative count, it should only go up over time, as long as people are not deleting their own retweets (which some might have done after learning about the label).
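The averaging behind these curves can be sketched roughly as follows. This is a minimal illustration, not the authors’ published code: it assumes hypothetical hourly snapshots stored as a dict from hours since posting to the observed cumulative retweet count, and a hypothetical grouping key of (author group, intervention type) so that Trump’s tweets can be averaged separately from everyone else’s. It also assumes every tweet has a value at every hour; the toy numbers are invented.

```python
from statistics import mean
from typing import Dict, List, Tuple

Group = Tuple[str, str]  # hypothetical key: (author group, intervention type)

# Invented example data: tweet_id -> {hours since posting: cumulative retweets}.
observations: Dict[str, Dict[int, int]] = {
    "a": {0: 0, 1: 90, 2: 110, 3: 112},
    "b": {0: 0, 1: 30, 2: 50, 3: 52},
    "c": {0: 0, 1: 40, 2: 40, 3: 40},
    "d": {0: 0, 1: 60, 2: 60, 3: 58},  # a small drop, as when retweets are deleted
}
groups: Dict[str, Group] = {
    "a": ("other", "none"),
    "b": ("other", "none"),
    "c": ("other", "hard"),
    "d": ("other", "hard"),
}


def average_curves(
    observations: Dict[str, Dict[int, int]],
    groups: Dict[str, Group],
    hours: List[int],
) -> Dict[Group, List[float]]:
    """Mean cumulative retweets per group at each hour.

    Assumes every tweet has an observation at every hour in `hours`.
    """
    curves: Dict[Group, List[float]] = {}
    for group in sorted(set(groups.values())):
        members = [tid for tid, g in groups.items() if g == group]
        curves[group] = [mean(observations[tid][h] for tid in members) for h in hours]
    return curves


curves = average_curves(observations, groups, [0, 1, 2, 3])
for group, curve in curves.items():
    print(group, curve)
```

Keying groups by author as well as by intervention type is one simple way to reproduce the left and right panels from a single pass over the data.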

The blue line shows how a tweet spreads when Twitter left it alone. As you can see, for the average politician, most of the spread of an unlabeled tweet occurs in the first two hours.

The orange line shows tweets that Twitter blocked from spreading. Here too, retweets increased rapidly at first, but that growth stopped suddenly. For the most part, Twitter’s “hard” interventions came within an hour after someone tweeted. Interestingly, we also find that for the non-Trump tweets, the average number of cumulative retweets dropped slightly, suggesting that some people deleted their retweets. The Twitter data doesn’t tell us whether this happened before or after Twitter’s intervention.

In the hours after Twitter blocked a tweet’s spread, it also removed access to data about that tweet’s retweets. The line flattens because we carry the last known number of retweets forward. As a result, we are probably overestimating retweets, because we can’t capture any later deletions. This count also excludes quote tweets, for which data was not available (see research note at end).
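The carry-forward step can be sketched as a “last observation carried forward” fill. In this minimal sketch, the function name `carry_forward` and the data layout are hypothetical: hourly snapshots map hours since posting to the observed cumulative count, with hours after the block either missing or set to `None`. We also assume a count of 0 before the first observation.

```python
from typing import Dict, List, Optional


def carry_forward(snapshots: Dict[int, Optional[int]], hours: List[int]) -> List[int]:
    """Fill missing hourly retweet counts with the last known value.

    Hours absent from `snapshots` (or mapped to None) reuse the most recent
    observed count, so the curve plateaus once data stops arriving.
    Assumes a count of 0 before the first observation.
    """
    filled: List[int] = []
    last = 0
    for h in hours:
        value = snapshots.get(h)
        if value is not None:
            last = value
        filled.append(last)
    return filled


# A blocked tweet whose data stops after hour 2: the last count repeats.
print(carry_forward({0: 0, 1: 80, 2: 95}, list(range(6))))
```

Because the fill simply repeats the last value it saw, any deletions that happen after data stops arriving never lower the curve. That is why the text notes that this approach probably overestimates retweets.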

Trump’s tweets with soft interventions spread further and longer

The yellow line tells a markedly different story for Trump’s tweets that received a soft intervention. For these tweets, we see a similar spike after publication. Unlike unlabeled tweets, however, these messages keep spreading: their average cumulative retweet count is the highest of the three groups, and it keeps climbing for a longer period of time.

This could be happening for one or both of two reasons. First, research finds that false information spreads further on Twitter than does factual information. These tweets could be the kinds of messages that tend to spread more on the platform. Second, the warning label could actually encourage people to engage with these tweets.

When labeling a tweet, Twitter also made it harder to retweet, adding another screen to click through before the tweet could be shared. Even with this friction, Trump’s warning-labeled tweets drew more retweets than his tweets with no intervention.

Tweets from other political elites that received a soft intervention also spread further and longer than tweets that received a hard intervention, though less than tweets that were left unlabeled.

Are warning labels effective?

Facebook recently reported that when it labeled Trump’s misleading posts by directing users to accurate election-related information, the posts spread anyway. That would be consistent with what we found on Twitter.

To be clear, this does not mean that warning labels are ineffective. First, Twitter may have labeled the most salient tweets, and users might have engaged with them even more if the platform had not applied the label.

Second, research suggests that warning labels reduce people’s willingness to believe false information; despite the spread, the label could have lowered users’ trust in what those tweets said.

Our research does suggest that hard interventions quickly curbed the spread of the targeted tweets, and that for most political elites, soft interventions were associated with less overall spread than no label at all. Trump’s tweets were the exception: those that received a soft intervention spread further and longer than his tweets that received no intervention.

To examine the data further, see the methods supplement to our analysis and our open-source data set, available on GitHub.

Research note: We did not count “quote tweets,” whereby users share the original tweet along with their own additional message, because once Twitter applies a hard intervention, it removes access to data on the number of retweets and quote tweets. Because additional retweets are prevented, we can assume that the number of retweets plateaus at its highest known value. However, quote tweets are still allowed after the intervention is applied, and we have limited ways to capture the true additional number of quote tweets.

Megan A. Brown is a research scientist at the New York University Center for Social Media and Politics.

Zeve Sanderson is the executive director of the New York University Center for Social Media and Politics.

Jonathan Nagler is a professor of politics at New York University and co-director of the NYU Center for Social Media and Politics.

Richard Bonneau is a professor of biology and computer science at New York University and co-director of the NYU Center for Social Media and Politics.