It’s Not Easy for Ordinary Citizens to Identify Fake News

In 2020, even small amounts of fake news about the coronavirus can have dire consequences. Unfortunately, it seems quite difficult for people to identify false or misleading news.

This article was originally published in The Washington Post.

Research has shown that fake news makes up a relatively small portion of Americans’ news consumption. Throughout 2016, the vast majority of U.S. Facebook users did not share any fake news articles. In the month leading up to the election, fake news sources made up only about 1 percent of the average Twitter user’s political news on the platform.

In 2020, however, even small amounts of fake news about coronavirus can have dire consequences. The current public health crisis requires the coordinated actions of individuals — maintaining social distance, buying reasonable quantities of food and supplies, and following the latest medical advice rather than bogus cures.

This means fake covid-19 news has the potential to undermine the public’s adoption of evidence-based public health responses and policies. And in public health, externalities are significant, because one person’s choices can affect other people’s health: quack cures can lead to more covid-19 cases. Disinformation can be damaging in other ways, too. In the United Kingdom, for instance, fake news blaming 5G wireless networks for the coronavirus apparently led to arson attacks against cellphone towers.

How good are people at sifting out fake news? In a collaboration between the NYU Center for Social Media and Politics and the Stanford Cyber Policy Center (supported by the Hewlett Foundation), we’ve been investigating whether ordinary individuals in the United States who encounter news when it first appears online — before fact-checkers like Snopes and PolitiFact have an opportunity to issue reports about an article’s veracity — are able to identify whether articles contain true or false information.

Unfortunately, it seems quite difficult for people to identify false or misleading news, and the small number of coronavirus news stories in our collection is no exception.

How we did our research

Over a 13-week period, our study allowed us to capture people’s assessments of fresh news articles in real time. Each day of the study, we relied on a fixed, preregistered process to select five popular articles published within the previous 24 hours.

Each day’s five articles were balanced across conservative, liberal and nonpartisan sources, and between mainstream news websites and websites known to produce fake news. In total, we sent each of the 150 articles to 90 survey respondents, which meant that over the course of the study we received more than 13,500 ratings of news stories from ordinary citizens, whom we reached via Qualtrics. To our knowledge, no individual took the study twice; in all, we surveyed 5,408 unique respondents, who together formed a nationally representative sample.

We also sent these articles separately to six independent fact-checkers and treated their most common response for each article (true, false/misleading, or cannot determine) as the “correct” answer for that article.
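To make the “most common response” rule concrete, here is a minimal Python sketch of that aggregation step. It is an illustration, not the authors’ code, and the function name `modal_verdict` is hypothetical. One detail is an assumption: with six fact-checkers and three possible verdicts, ties (a 3-3 or 2-2-2 split) can occur, and the article does not say how they were handled, so this sketch returns `None` to flag such articles rather than picking a winner.

```python
from collections import Counter
from typing import Optional, Sequence

# The three verdicts fact-checkers could give, as described in the article.
LABELS = ("true", "false/misleading", "cannot determine")


def modal_verdict(ratings: Sequence[str]) -> Optional[str]:
    """Return the most common fact-checker verdict for one article.

    ASSUMPTION (not stated in the article): if two or more verdicts tie
    for most common, there is no single "correct" answer, so return None.
    """
    for r in ratings:
        if r not in LABELS:
            raise ValueError(f"unexpected verdict: {r!r}")
    # most_common() lists (verdict, count) pairs from highest count down.
    counts = Counter(ratings).most_common()
    if not counts:
        return None
    top_label, top_count = counts[0]
    # A tie exists if the runner-up has the same count as the leader.
    if len(counts) > 1 and counts[1][1] == top_count:
        return None
    return top_label


if __name__ == "__main__":
    # Four of six fact-checkers say false/misleading: that is the modal answer.
    print(modal_verdict(["false/misleading"] * 4 + ["true", "cannot determine"]))
    # An even 3-3 split has no single most common verdict.
    print(modal_verdict(["true"] * 3 + ["false/misleading"] * 3))
```

Returning `None` keeps tied articles visible for separate handling; a study could just as reasonably map ties to “cannot determine” or drop those articles.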

People aren’t good at identifying false news

When shown an article that was rated “true” by the professional fact-checkers, respondents correctly identified the article as true 62 percent of the time. When the source of the true news story was a mainstream news source, respondents correctly identified the article as true 73 percent of the time.

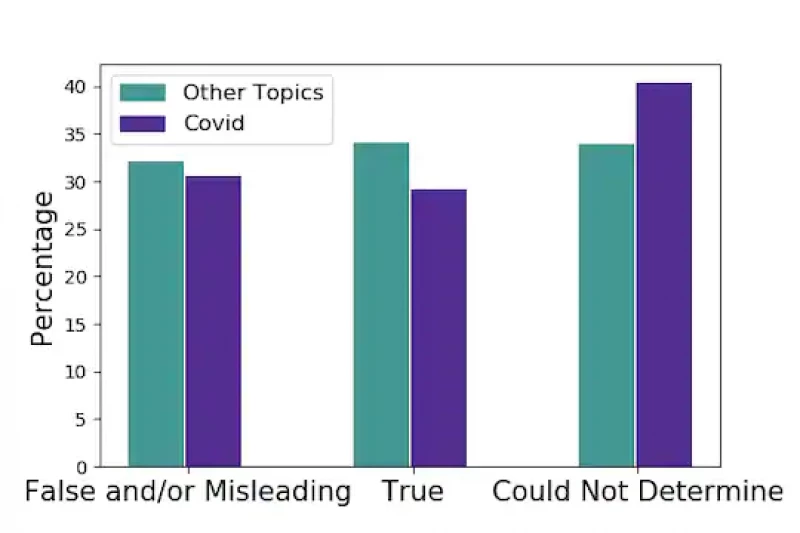

However, for articles that the professional fact-checkers rated “false/misleading,” study participants were as likely to say an article was true as they were to say it was false or misleading. Roughly one-third of the time, they told us they were unable to determine the article’s veracity. In other words, people on the whole were unable to correctly classify false or misleading news.
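Figures like these are shares of ratings within each fact-checker category. A minimal sketch of that tabulation in Python follows, assuming each rating is stored as a (fact-checker verdict, respondent answer) pair. The names `response_shares` and `accuracy` are hypothetical, the shares are unweighted (any survey weighting the authors may have applied is ignored), and the toy data is invented for illustration; it does not reproduce the study’s figures.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple

# One rating = (fact-checkers' verdict for the article, respondent's answer).
Rating = Tuple[str, str]


def response_shares(ratings: Iterable[Rating]) -> Dict[str, Dict[str, float]]:
    """Group ratings by fact-checker verdict and return, within each group,
    the unweighted share of respondents who gave each answer."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for verdict, answer in ratings:
        counts[verdict][answer] += 1
    shares: Dict[str, Dict[str, float]] = {}
    for verdict, c in counts.items():
        total = sum(c.values())
        shares[verdict] = {answer: n / total for answer, n in c.items()}
    return shares


def accuracy(shares: Dict[str, Dict[str, float]], verdict: str) -> float:
    """Share of answers in one verdict group that matched the fact-checkers."""
    return shares.get(verdict, {}).get(verdict, 0.0)


if __name__ == "__main__":
    # Invented toy data for illustration only; NOT the study's ratings.
    toy = (
        [("false/misleading", "true")] * 3
        + [("false/misleading", "false/misleading")] * 3
        + [("false/misleading", "cannot determine")] * 3
        + [("true", "true")] * 2
        + [("true", "false/misleading")] * 1
    )
    shares = response_shares(toy)
    print(shares["false/misleading"])
    print(accuracy(shares, "true"))
```

In this toy data, answers about false/misleading items split evenly among “true,” “false/misleading” and “cannot determine,” which is the kind of pattern the paragraph above describes, while two of three answers about true items match the fact-checkers.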

How did people gauge coronavirus-related misinformation?

Between Jan. 22 and Feb. 6 (the first two weeks after confirmed cases of the coronavirus appeared outside of China, but before any social distancing orders were in place in the United States), four of the articles in our study that fact-checkers rated as false or misleading were related to the coronavirus.

All four articles promoted the unfounded rumor that the virus was intentionally developed in a laboratory. Although accidental releases of pathogens from labs have previously caused significant morbidity and mortality, in the current pandemic multiple pieces of evidence suggest this virus is of natural origin. There’s little evidence the virus was manufactured or altered.

When we asked people to rate the veracity of these four articles, the results mirrored those from the full study: Only 30 percent of participants correctly classified them as false or misleading.

Additionally, on average, respondents seemed to have more trouble deciding what to think about false covid-19 stories, leading to a higher proportion of “could not determine” responses than we saw for the stories on other topics our professional fact-checkers rated as “false/misleading.” This finding suggests it may be particularly difficult to identify misinformation in newly emerging topics (although further research would be needed to confirm this).

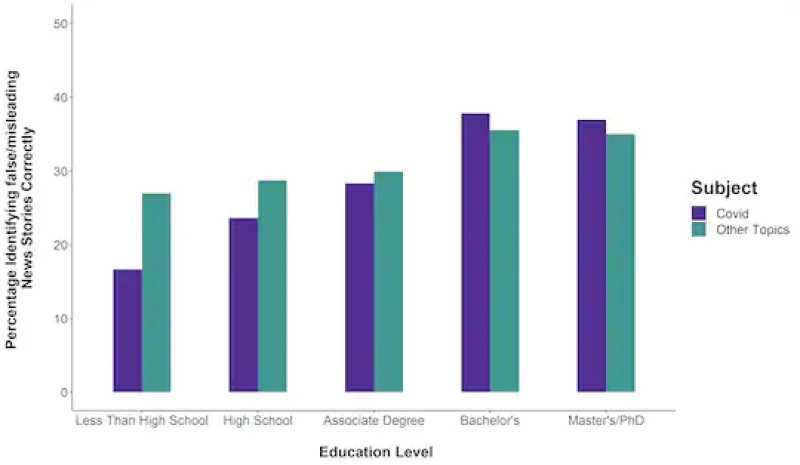

Education matters, a little

Study participants with higher levels of education did better at identifying both fake news overall and coronavirus-related fake news, but they were far from able to weed out misinformation reliably. In fact, no group, regardless of education level, correctly identified the stories that the professional fact-checkers had labeled as false or misleading more than 40 percent of the time.

What do these findings reveal?

Taken together, our findings suggest there is widespread potential for vulnerability to misinformation when it first appears online. This is especially worrying during the current pandemic, when governments, public health officials and the media are constantly updating information. Nothing we saw in our data suggested the potential for misinformation on covid-19 was any lower than the overall findings — though it’s important to note that our study did not include misinformation related to cures or treatments.

How individuals respond to the pandemic will depend, in large part, on the quality of information to which they are exposed — and the stories and the reports they find credible. In the current environment, misinformation has the potential to undermine social distancing efforts, to lead people to hoard supplies, or to promote the adoption of potentially dangerous fake cures.

Since the outbreak of covid-19, platforms such as Facebook and Twitter have been inundated with a wide variety of false narratives, ranging from accusations of partisan hoaxes to medical misinformation promoting unverified treatments. This suggests the rapidly changing information environment surrounding covid-19 has the potential to magnify the vulnerabilities revealed by previous studies of online misinformation.

The four news articles included in our study, which cover only a single rumor, are hardly representative of what social media users are seeing online. While more research is needed on other types of coronavirus-related misinformation, our findings suggest that non-trivial numbers of people will believe false information to be true when they first encounter it. They also suggest that efforts to remove coronavirus-related misinformation will need to be swift, and implemented early in an article’s life cycle, to stop the spread of something else that’s dangerous: misinformation.

Zeve Sanderson is the executive director of the NYU Center for Social Media and Politics.

Kevin Aslett is a postdoctoral researcher at the NYU Center for Social Media and Politics.

Will Godel is a PhD student in NYU’s Department of Politics and a graduate research affiliate of the NYU Center for Social Media and Politics.

Nathaniel Persily is the James B. McClatchy Professor of Law at Stanford University and co-director of Stanford’s Cyber Policy Center.

Jonathan Nagler is a professor of politics at NYU and co-director of the NYU Center for Social Media and Politics.

Richard Bonneau is a professor of biology and computer science at NYU and co-director of the NYU Center for Social Media and Politics.